Job to update link tables for rerendered wiki pages.

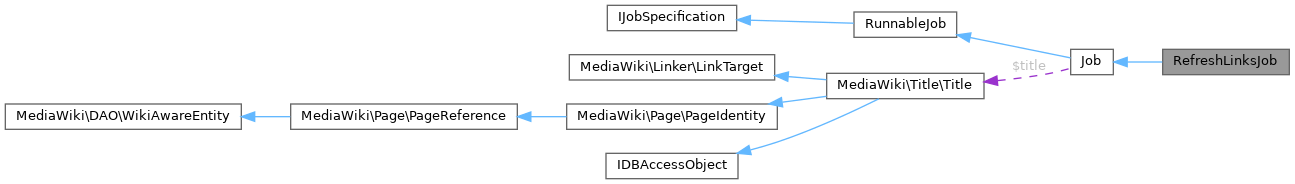

Inherits Job.

Public Member Functions

| Member | Description |
|---|---|
| __construct (PageIdentity $page, array $params) | |
| getDeduplicationInfo () | Subclasses may need to override this to make duplication detection work. |
| run () | Run the job. |
| workItemCount () | |

Public Member Functions inherited from Job

| Member | Description |
|---|---|
| __construct ( $command, $params=null) | |
| allowRetries () | |
| getLastError () | |
| getMetadata ( $field=null) | |
| getParams () | |
| getQueuedTimestamp () | |
| getReadyTimestamp () | |
| getReleaseTimestamp () | |
| getRequestId () | |
| getRootJobParams () | |
| getTitle () | |
| getType () | |
| hasExecutionFlag ( $flag) | |
| hasRootJobParams () | |
| ignoreDuplicates () | Whether the queue should reject insertion of this job if a duplicate exists. |
| isRootJob () | |
| setMetadata ( $field, $value) | |
| teardown ( $status) | |
| toString () | |

Public Member Functions inherited from RunnableJob

| Member | Description |
|---|---|
| tearDown ( $status) | Do any final cleanup after run(), deferred updates, and all DB commits happen. |

Static Public Member Functions

| Modifier | Member | Description |
|---|---|---|
| static | newDynamic (PageIdentity $page, array $params) | |
| static | newPrioritized (PageIdentity $page, array $params) | |

Static Public Member Functions inherited from Job

| Modifier | Member | Description |
|---|---|---|
| static | factory ( $command, $params=[]) | Create the appropriate object to handle a specific job. |
| static | newRootJobParams ( $key) | Get "root job" parameters for a task. |

Protected Member Functions

| Member | Description |
|---|---|
| runForTitle (PageIdentity $pageIdentity) | |

Protected Member Functions inherited from Job

| Member | Description |
|---|---|
| addTeardownCallback ( $callback) | |
| setLastError ( $error) | |

Additional Inherited Members

Public Attributes inherited from Job

| Type | Name | Description |
|---|---|---|
| string | $command | |
| array | $metadata = [] | Additional queue metadata. |
| array | $params | Array of job parameters. |

Protected Attributes inherited from Job

| Type | Name | Description |
|---|---|---|
| string | $error | Text for error that occurred last. |
| int | $executionFlags = 0 | Bitfield of JOB_* class constants. |
| bool | $removeDuplicates = false | Expensive jobs may set this to true. |
| callable[] | $teardownCallbacks = [] | |
| Title | $title | |

Detailed Description

Job to update link tables for rerendered wiki pages.

This job comes in a few variants:

- a) Recursive jobs to update links for backlink pages for a given title. These jobs have (recursive:true,table:<table>) set.

- b) Jobs to update links for a set of pages (the job title is ignored). These jobs have (pages:(<page ID>:(<namespace>,<title>),...)) set.

- c) Jobs to update links for a single page (the job title). These jobs need no extra fields set.
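The variants differ only in which parameters are set. The sketch below shows how a params array could map onto them, assuming the checks run in the order a, b, c. `describeRefreshLinksVariant` is a hypothetical helper, not part of MediaWiki, and the example values (`templatelinks`, page ID 42, `Main_Page`) are illustrative only.

```php
<?php
// Hypothetical helper (not part of MediaWiki): names the variant that a
// params array would select, following the a/b/c list above.
function describeRefreshLinksVariant( array $params ): string {
	if ( !empty( $params['recursive'] ) ) {
		// (a) recursive job over the backlinks of the job title
		return 'recursive';
	}
	if ( isset( $params['pages'] ) ) {
		// (b) explicit set of pages; the job title is ignored
		return 'pages';
	}
	// (c) no extra fields: refresh the job title itself
	return 'single';
}

// Illustrative parameter shapes for each variant.
$recursive = [ 'recursive' => true, 'table' => 'templatelinks' ];
$pages = [ 'pages' => [ 42 => [ 0, 'Main_Page' ] ] ];
$single = [];

echo describeRefreshLinksVariant( $recursive ), "\n"; // recursive
echo describeRefreshLinksVariant( $pages ), "\n";     // pages
echo describeRefreshLinksVariant( $single ), "\n";    // single
```

The helper only inspects array keys, so it runs without a MediaWiki installation. In a real job these parameters would be passed as the `$params` argument of the constructor or of the static factory methods.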

Metrics:

- refreshlinks_warning.<warning>: A recoverable issue. The job will continue as normal.

- refreshlinks_outcome.<reason>: If the job ends with an unusual outcome, it increments this metric exactly once. If the reason starts with bad_, a failure is logged and the job may be retried later. If the reason starts with good_, the job was cancelled and is considered a success, i.e. it was superseded.
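A consumer of these metrics could sort outcome reasons by their prefix, as in the sketch below. `classifyRefreshLinksOutcome` is a hypothetical helper, and the reason strings are made-up examples rather than names the job actually emits.

```php
<?php
// Hypothetical helper: classifies a refreshlinks_outcome reason by its prefix.
function classifyRefreshLinksOutcome( string $reason ): string {
	if ( strncmp( $reason, 'bad_', 4 ) === 0 ) {
		// Failure: logged, and the job may be retried later.
		return 'failure';
	}
	if ( strncmp( $reason, 'good_', 5 ) === 0 ) {
		// Cancelled but considered a success (the job was superseded).
		return 'success';
	}
	// Prefix not covered by the description above.
	return 'unknown';
}

// Made-up reason names, for illustration only.
echo classifyRefreshLinksOutcome( 'bad_example_reason' ), "\n";  // failure
echo classifyRefreshLinksOutcome( 'good_example_reason' ), "\n"; // success
```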

Definition at line 57 of file RefreshLinksJob.php.

Constructor & Destructor Documentation

◆ __construct()

RefreshLinksJob::__construct ( PageIdentity $page, array $params )

Definition at line 63 of file RefreshLinksJob.php.

References MediaWiki\Page\PageIdentity\canExist().

Member Function Documentation

◆ getDeduplicationInfo()

RefreshLinksJob::getDeduplicationInfo ( )

Subclasses may need to override this to make duplication detection work.

The resulting map conveys everything that makes the job unique. This is only checked if ignoreDuplicates() returns true, meaning that duplicate jobs are supposed to be ignored.

- Stability: stable to override

- Returns: array Map of key/values

- Since: 1.21

Reimplemented from Job.

Definition at line 454 of file RefreshLinksJob.php.
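To illustrate why a subclass might override this method, the sketch below uses stand-in classes rather than the real Job hierarchy. `SketchJob`, `SketchRefreshJob`, and the `requestedAt` parameter are all hypothetical. The subclass drops a field that varies between otherwise identical jobs, so their deduplication maps compare equal.

```php
<?php
// Stand-in for a job base class; only the deduplication part is modelled.
class SketchJob {
	protected string $type;
	protected array $params;

	public function __construct( string $type, array $params ) {
		$this->type = $type;
		$this->params = $params;
	}

	/** Map conveying everything that makes the job unique. */
	public function getDeduplicationInfo(): array {
		return [ 'type' => $this->type, 'params' => $this->params ];
	}
}

// Hypothetical subclass: removes a field that should not affect uniqueness.
class SketchRefreshJob extends SketchJob {
	public function getDeduplicationInfo(): array {
		$info = parent::getDeduplicationInfo();
		unset( $info['params']['requestedAt'] ); // assumed volatile field
		return $info;
	}
}

$a = new SketchRefreshJob( 'sketchRefresh', [ 'pageId' => 7, 'requestedAt' => 1000 ] );
$b = new SketchRefreshJob( 'sketchRefresh', [ 'pageId' => 7, 'requestedAt' => 2000 ] );

// Identical maps mean a queue could treat the two jobs as duplicates.
var_dump( $a->getDeduplicationInfo() === $b->getDeduplicationInfo() ); // bool(true)
```

Without the override, the differing `requestedAt` values would make the two maps unequal and the jobs would not be recognised as duplicates.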

◆ newDynamic()

static RefreshLinksJob::newDynamic ( PageIdentity $page, array $params )

- Parameters: PageIdentity $page, array $params

- Returns: RefreshLinksJob

Definition at line 104 of file RefreshLinksJob.php.

References $job.

◆ newPrioritized()

static RefreshLinksJob::newPrioritized ( PageIdentity $page, array $params )

- Parameters: PageIdentity $page, array $params

- Returns: RefreshLinksJob

Definition at line 92 of file RefreshLinksJob.php.

References $job.

Referenced by MediaWiki\Deferred\LinksUpdate\LinksUpdate\queueRecursiveJobs().

◆ run()

RefreshLinksJob::run ( )

Run the job.

- Returns: bool Success

Implements RunnableJob.

Definition at line 111 of file RefreshLinksJob.php.

References $title, Job\getRootJobParams(), BacklinkJobUtils\partitionBacklinkJob(), runForTitle(), and Job\setLastError().

◆ runForTitle()

protected RefreshLinksJob::runForTitle ( PageIdentity $pageIdentity )

- Parameters: PageIdentity $pageIdentity

- Returns: bool

Definition at line 172 of file RefreshLinksJob.php.

References Job\setLastError().

Referenced by run().

◆ workItemCount()

RefreshLinksJob::workItemCount ( )

- Stability: stable to override

- Returns: int

Reimplemented from Job.

Definition at line 470 of file RefreshLinksJob.php.

The documentation for this class was generated from the following file:

- includes/jobqueue/jobs/RefreshLinksJob.php