Job to purge the cache for all pages that link to or use another page or file.

Public Member Functions

- __construct (Title $title, array $params)
- run (): Run the job.
- workItemCount ()

Public Member Functions inherited from Job

- __construct ($command, $title, $params=false)
- allowRetries ()
- getDeduplicationInfo (): Subclasses may need to override this to make duplication detection work.
- getLastError ()
- getParams ()
- getQueuedTimestamp ()
- getReadyTimestamp ()
- getReleaseTimestamp ()
- getRequestId ()
- getRootJobParams ()
- getTitle ()
- getType ()
- hasRootJobParams ()
- ignoreDuplicates (): Whether the queue should reject insertion of this job if a duplicate exists.
- insert (): Insert a single job into the queue.
- isRootJob ()
- teardown ($status): Do any final cleanup after run(), deferred updates, and all DB commits happen.
- toString ()

Static Public Member Functions

- static newForBacklinks (Title $title, $table)

Static Public Member Functions inherited from Job

- static batchInsert ($jobs): Batch-insert a group of jobs into the queue.
- static factory ($command, Title $title, $params=[]): Create the appropriate object to handle a specific job.
- static newRootJobParams ($key): Get "root job" parameters for a task.

Protected Member Functions

- invalidateTitles (array $pages)

Protected Member Functions inherited from Job

- addTeardownCallback ($callback)
- setLastError ($error)

Additional Inherited Members

Public Attributes inherited from Job

- string $command
- array $metadata = []: Additional queue metadata.
- array $params: Array of job parameters.

Protected Attributes inherited from Job

- string $error: Text for error that occurred last.
- bool $removeDuplicates: Expensive jobs may set this to true.
- callable[] $teardownCallbacks = []
- Title $title

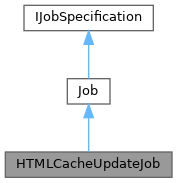

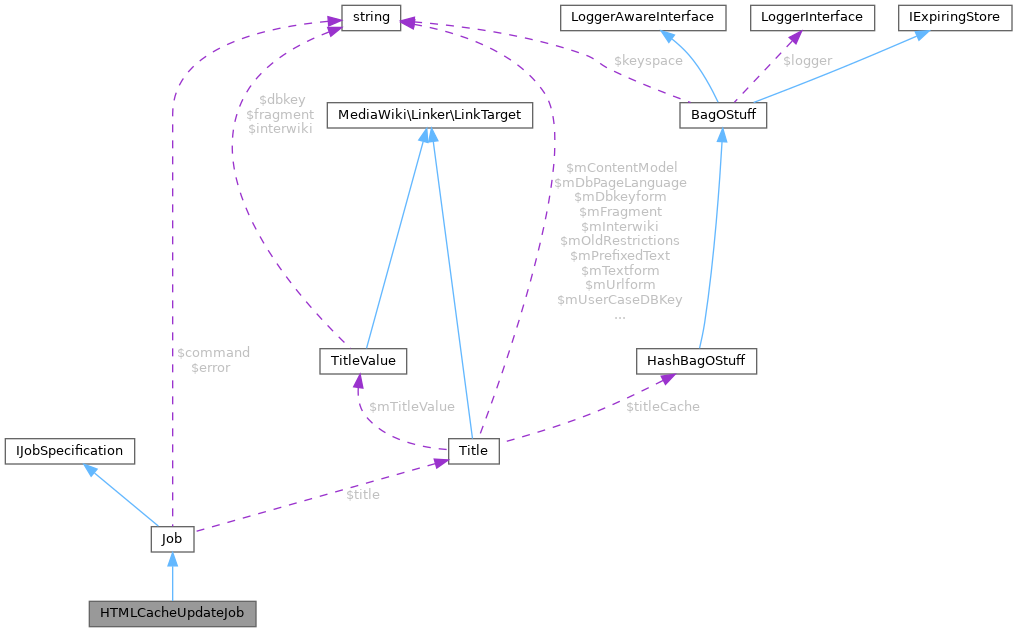

Detailed Description

Job to purge the cache for all pages that link to or use another page or file.

This job comes in a few variants:

- a) Recursive jobs to purge caches for backlink pages for a given title. These jobs have (recursive:true,table:<table>) set.

- b) Jobs to purge caches for a set of titles (the job title is ignored). These jobs have (pages:(<page ID>:(<namespace>,<title>),...)) set.
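The two parameter shapes above can be written out as plain PHP arrays. This is a minimal sketch under stated assumptions: buildPagesParam() is a hypothetical helper that is not part of MediaWiki, and 'templatelinks' and the page data are example values only.

```php
<?php
// Sketch of the two job parameter shapes. buildPagesParam() is a
// hypothetical helper for illustration; it is not part of MediaWiki.

// Variant a): recursive backlink purge ('templatelinks' is an example table).
$recursiveParams = [ 'recursive' => true, 'table' => 'templatelinks' ];

/**
 * Variant b): build the 'pages' map of (page ID => (namespace, DB key)).
 *
 * @param array $rows List of [pageId, namespace, dbKey] triples
 * @return array Map keyed by page ID
 */
function buildPagesParam( array $rows ): array {
	$pages = [];
	foreach ( $rows as [ $pageId, $namespace, $dbKey ] ) {
		$pages[(int)$pageId] = [ (int)$namespace, (string)$dbKey ];
	}
	return $pages;
}

// Example page set (IDs and titles are made up).
$pageSetParams = [
	'pages' => buildPagesParam( [
		[ 12, 0, 'Main_Page' ],
		[ 34, 10, 'Infobox' ],
	] ),
];
```

Keying the map by page ID keeps each page listed at most once, and the (namespace, DB key) pair is enough to rebuild a title without another lookup.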

Definition at line 36 of file HTMLCacheUpdateJob.php.

Constructor & Destructor Documentation

◆ __construct()

HTMLCacheUpdateJob::__construct (Title $title, array $params)

Definition at line 37 of file HTMLCacheUpdateJob.php.

References Job\$params, and Job\$title.

Member Function Documentation

◆ invalidateTitles()

HTMLCacheUpdateJob::invalidateTitles (array $pages) [protected]

- Parameters

- array $pages Map of (page ID => (namespace, DB key)) entries

Definition at line 96 of file HTMLCacheUpdateJob.php.

References $batch, Job\$title, $wgUpdateRowsPerQuery, $wgUseFileCache, HTMLFileCache\clearFileCache(), DB_MASTER, TitleArray\newFromResult(), CdnCacheUpdate\newFromTitles(), wfGetDB(), wfGetLBFactory(), and wfTimestampNow().

Referenced by run().
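invalidateTitles() references $wgUpdateRowsPerQuery and a $batch variable, which suggests the page IDs are processed in fixed-size groups. The sketch below isolates that batching idea, assuming the groups are formed with PHP's array_chunk(); chunkPageIds() is a hypothetical name, and the database updates and CDN/file-cache purges the real method performs are deliberately omitted.

```php
<?php
/**
 * Split the page IDs of a (page ID => (namespace, DB key)) map into batches
 * of at most $rowsPerQuery IDs. Hypothetical helper for illustration only.
 *
 * @param array $pages Map of (page ID => (namespace, DB key)) entries
 * @param int $rowsPerQuery Max IDs per batch (stand-in for $wgUpdateRowsPerQuery)
 * @return int[][] List of page ID batches
 */
function chunkPageIds( array $pages, int $rowsPerQuery ): array {
	$pageIds = array_keys( $pages );
	if ( $pageIds === [] ) {
		return []; // nothing to invalidate
	}
	// Guard against a non-positive batch size, which array_chunk() rejects.
	return array_chunk( $pageIds, max( 1, $rowsPerQuery ) );
}

$pages = [
	1 => [ 0, 'A' ],
	2 => [ 0, 'B' ],
	3 => [ 0, 'C' ],
	4 => [ 0, 'D' ],
	5 => [ 0, 'E' ],
];
$batches = chunkPageIds( $pages, 2 );
// $batches is [ [ 1, 2 ], [ 3, 4 ], [ 5 ] ]
```

Bounding each batch keeps every individual write query small, at the cost of issuing more queries for large page sets.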

◆ newForBacklinks()

static HTMLCacheUpdateJob::newForBacklinks (Title $title, $table)

- Returns

- HTMLCacheUpdateJob

Definition at line 48 of file HTMLCacheUpdateJob.php.

References Job\$title and Job\newRootJobParams().

Referenced by HTMLCacheUpdate\doUpdate().
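newForBacklinks() references Job\newRootJobParams(), consistent with variant a) in the detailed description: the new job carries recursive:true and the backlink table, merged with root-job parameters. The sketch below models only that parameter construction. Both functions are hypothetical stand-ins, and the root-job key string and the field names returned by sketchRootJobParams() are assumptions, not taken from this page.

```php
<?php
/**
 * Hypothetical stand-in for Job\newRootJobParams( $key ). The field names
 * and the use of sha1() are assumptions for illustration.
 */
function sketchRootJobParams( string $key ): array {
	return [
		'rootJobSignature' => sha1( $key ),
		// 14-digit UTC timestamp in YYYYMMDDHHMMSS form
		'rootJobTimestamp' => gmdate( 'YmdHis' ),
	];
}

/**
 * Hypothetical sketch of the parameters of a recursive backlink purge job.
 * The key format passed to sketchRootJobParams() is an assumption.
 */
function sketchBacklinkParams( string $titleText, string $table ): array {
	// The array "+" operator keeps left-hand keys and only adds missing ones.
	return [ 'table' => $table, 'recursive' => true ]
		+ sketchRootJobParams( "htmlCacheUpdate:{$table}:{$titleText}" );
}

$params = sketchBacklinkParams( 'Template:Infobox', 'templatelinks' );
```

Deriving the signature from the table and title means two purge requests for the same dependency produce the same root-job signature, which is what makes de-duplication possible.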

◆ run()

HTMLCacheUpdateJob::run ()

Run the job.

- Returns

- bool Success

Reimplemented from Job.

Definition at line 60 of file HTMLCacheUpdateJob.php.

References $t, Job\$title, $wgUpdateRowsPerJob, $wgUpdateRowsPerQuery, Job\getRootJobParams(), invalidateTitles(), BacklinkJobUtils\partitionBacklinkJob(), and JobQueueGroup\singleton().
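The references listed for run() (BacklinkJobUtils\partitionBacklinkJob(), JobQueueGroup\singleton(), invalidateTitles()) point to a dispatch on the job parameters: recursive jobs are split into smaller jobs and pushed back onto the queue, while page-set jobs purge their pages directly. The sketch below models only that decision. planRun() and its return labels are hypothetical, and the final single-title fallback (suggested by the references to $t and Job\$title) is an assumption.

```php
<?php
/**
 * Hypothetical model of how run() picks a code path from the job
 * parameters. Returns a label instead of doing any work.
 */
function planRun( array $params ): string {
	if ( !empty( $params['recursive'] ) ) {
		// Variant a): partition the backlinks into smaller jobs and re-queue them.
		return 'partition-and-push';
	}
	if ( isset( $params['pages'] ) ) {
		// Variant b): purge the listed pages; the job title is ignored.
		return 'invalidate-pages';
	}
	// Assumed fallback: purge only the job's own title.
	return 'invalidate-job-title';
}
```

Checking recursive before pages follows the order in which the variants are described; whether the real method tests them in the same order is not stated here.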

◆ workItemCount()

HTMLCacheUpdateJob::workItemCount ()

- Returns

- int Number of actual "work items" handled in this job

- See also

- $wgJobBackoffThrottling

- Since

- 1.23

Reimplemented from Job.

Definition at line 152 of file HTMLCacheUpdateJob.php.
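The See also entry ties this count to $wgJobBackoffThrottling, which throttles job runners by work items per second for a given job type. A plausible reading, given the job variants, is that a page-set job counts one item per listed page and any other job counts as one. The sketch below encodes that assumption; sketchWorkItemCount() is hypothetical.

```php
<?php
/**
 * Hypothetical sketch: one work item per page in a page-set job,
 * and one work item for any other kind of job (assumption).
 */
function sketchWorkItemCount( array $params ): int {
	return isset( $params['pages'] ) ? count( $params['pages'] ) : 1;
}
```

Counting pages rather than jobs lets a throttle treat one large page-set job as heavier than a single-title job.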

The documentation for this class was generated from the following file:

- includes/jobqueue/jobs/HTMLCacheUpdateJob.php