Job to update link tables for rerendered wiki pages.

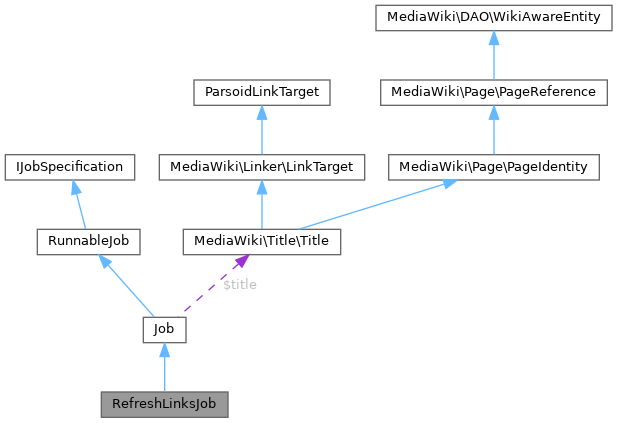

Inherits Job.

Public Member Functions

| Function | Description |
| --- | --- |
| `__construct (PageIdentity $page, array $params)` | |
| `getDeduplicationInfo ()` | Subclasses may need to override this to make duplication detection work. |
| `run ()` | Run the job. |
| `workItemCount ()` | |

Public Member Functions inherited from Job

| Function | Description |
| --- | --- |
| `__construct ($command, $params=null)` | |
| `allowRetries ()` | |
| `getLastError ()` | |
| `getMetadata ($field=null)` | |
| `getParams ()` | |
| `getQueuedTimestamp ()` | |
| `getReadyTimestamp ()` | |
| `getReleaseTimestamp ()` | |
| `getRequestId ()` | |
| `getRootJobParams ()` | |
| `getTitle ()` | |
| `getType ()` | |
| `hasExecutionFlag ($flag)` | |
| `hasRootJobParams ()` | |
| `ignoreDuplicates ()` | Whether the queue should reject insertion of this job if a duplicate exists. |
| `isRootJob ()` | |
| `setMetadata ($field, $value)` | |
| `teardown ($status)` | |
| `toString ()` | |

Public Member Functions inherited from RunnableJob

| Function | Description |
| --- | --- |
| `tearDown ($status)` | Do any final cleanup after run(), deferred updates, and all DB commits happen. |

Static Public Member Functions

| Function | Description |
| --- | --- |
| `newDynamic (PageIdentity $page, array $params)` | |
| `newPrioritized (PageIdentity $page, array $params)` | |

Static Public Member Functions inherited from Job

| Function | Description |
| --- | --- |
| `factory ($command, $params=[])` | Create the appropriate object to handle a specific job. |
| `newRootJobParams ($key)` | Get "root job" parameters for a task. |

Protected Member Functions

| Function | Description |
| --- | --- |
| `runForTitle (PageIdentity $pageIdentity)` | |

Protected Member Functions inherited from Job

| Function | Description |
| --- | --- |
| `addTeardownCallback ($callback)` | |
| `setLastError ($error)` | |

Additional Inherited Members

Public Attributes inherited from Job

| Type | Attribute | Description |
| --- | --- | --- |
| string | `$command` | |
| array | `$metadata = []` | Additional queue metadata. |
| array | `$params` | Array of job parameters. |

Protected Attributes inherited from Job

| Type | Attribute | Description |
| --- | --- | --- |
| string | `$error` | Text of the error that occurred last. |
| int | `$executionFlags = 0` | Bitfield of `JOB_*` class constants. |
| bool | `$removeDuplicates = false` | Expensive jobs may set this to true. |
| callable[] | `$teardownCallbacks = []` | |
| Title | `$title` | |

Detailed Description

Job to update link tables for rerendered wiki pages.

This job comes in a few variants:

- a) Recursive jobs to update links for backlink pages for a given title. Scheduled by LinksUpdate::queueRecursiveJobsForTable(); used to refresh pages which link to or transclude a given title. These jobs have `(recursive:true,table:<table>)` set. They just look up which pages link to the job title and schedule them as a set of non-recursive RefreshLinksJob jobs (and possibly one new recursive job as a way of continuation).

- b) Jobs to update links for a set of pages (the job title is ignored). These jobs have (pages:(<page ID>:(<namespace>,<title>),...) set.

- c) Jobs to update links for a single page (the job title). These jobs need no extra fields set.
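The fan-out described in variant a) can be pictured with the Python sketch below. It is only an illustration, not MediaWiki's PHP code, and it does not reproduce BacklinkJobUtils::partitionBacklinkJob(). The function name, the `batch_size` and `max_batches` limits, and the `{"start": <page ID>}` shape of the continuation's `range` value are all hypothetical.

```python
from __future__ import annotations

from typing import Any


def partition_recursive_job(
    title: str,
    table: str,
    backlinks: list[tuple[int, int, str]],
    batch_size: int,
    max_batches: int,
) -> list[dict[str, Any]]:
    """Split one recursive job into non-recursive batch jobs and, if backlinks
    remain, one recursive continuation job (hypothetical helper).

    backlinks holds (page_id, namespace, dbkey) tuples sorted by page ID.
    """
    jobs: list[dict[str, Any]] = []
    limit = batch_size * max_batches
    handled, remaining = backlinks[:limit], backlinks[limit:]

    # Variant b) jobs: each one refreshes a fixed set of pages.
    for start in range(0, len(handled), batch_size):
        batch = handled[start:start + batch_size]
        jobs.append({
            "title": title,  # ignored by variant b) jobs
            "recursive": False,
            "triggeredRecursive": True,
            "pages": {page_id: (ns, dbkey) for page_id, ns, dbkey in batch},
        })

    # Continuation: a new recursive job that resumes at the first unhandled page.
    if remaining:
        jobs.append({
            "title": title,
            "recursive": True,
            "table": table,
            "triggeredRecursive": True,
            "range": {"start": remaining[0][0]},  # hypothetical shape
        })
    return jobs
```

With seven backlinks (page IDs 1 to 7), `batch_size=2` and `max_batches=2`, this yields two batch jobs covering page IDs 1 to 4 and one continuation job starting at page ID 5. Capping the work per pass keeps each job bounded, at the cost of a chain of continuation jobs for heavily used templates.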

Job parameters for all jobs:

- recursive (bool): When false, updates the current page. When true, updates the pages which link/transclude the current page.

- triggeringRevisionId (int): The revision of the edit which caused the link refresh. For manually triggered updates, the last revision of the page (at the time of scheduling).

- triggeringUser (array): The user who triggered the refresh, in the form of a [ 'userId' => int, 'userName' => string ] array. This is not necessarily the user who created the revision.

- triggeredRecursive (bool): Set on all jobs which were partitioned from another, recursive job. For debugging.

- Standard deduplication params (see JobQueue::deduplicateRootJob()).

For recursive jobs:

- table (string): Which table to use (imagelinks or templatelinks) when searching for affected pages.

- range (array): Used for recursive jobs when some pages have already been partitioned into separate jobs. Contains the list of ranges that still need to be partitioned. See BacklinkJobUtils::partitionBacklinkJob().

- division: Number of times the job was partitioned already (for debugging).

For non-recursive jobs:

- pages (array): Associative array of [ <page ID> => [ <namespace>, <dbkey> ] ]. Might be omitted, then the job title will be used.

- isOpportunistic (bool): Set for opportunistic single-page updates. These are "free" updates that are queued when most of the work needed to be performed anyway for non-linkrefresh-related reasons, and can be more easily discarded if they don't seem useful. See WikiPage::triggerOpportunisticLinksUpdate().

- useRecursiveLinksUpdate (bool): When true, triggers recursive jobs for each page.
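The parameter shapes listed above can be checked mechanically. The Python sketch below is illustrative only: `validate_refresh_params` is a hypothetical helper, rejecting `pages` on recursive jobs is an assumption drawn from how the parameters are grouped here, and MediaWiki itself may validate differently or not at all.

```python
from __future__ import annotations

from typing import Any


def validate_refresh_params(params: dict[str, Any]) -> list[str]:
    """Return problems found in a RefreshLinksJob-style parameter map (hypothetical helper)."""
    problems: list[str] = []

    # triggeringUser, when present, must look like {'userId': int, 'userName': str}.
    user = params.get("triggeringUser")
    if user is not None and not (
        isinstance(user, dict)
        and isinstance(user.get("userId"), int)
        and isinstance(user.get("userName"), str)
    ):
        problems.append("triggeringUser must be {'userId': int, 'userName': str}")

    if params.get("recursive"):
        # Recursive jobs search one backlink table for affected pages.
        if params.get("table") not in ("imagelinks", "templatelinks"):
            problems.append("recursive jobs need table set to imagelinks or templatelinks")
        if "pages" in params:
            # Assumption: pages is only meaningful for non-recursive jobs.
            problems.append("pages is only used by non-recursive jobs")
    else:
        # pages maps page ID -> (namespace, dbkey); if omitted, the job title is used.
        for page_id, entry in params.get("pages", {}).items():
            if not (
                isinstance(page_id, int)
                and isinstance(entry, (tuple, list))
                and len(entry) == 2
            ):
                problems.append(f"malformed pages entry for {page_id!r}")
    return problems
```

An empty result means the map matches the documented shapes. It says nothing about whether the referenced pages or revisions exist, which is checked only when the job runs.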

Metrics:

- `refreshlinks_superseded_updates_total`: The number of times the job was cancelled because the target page had already been refreshed by a different edit or job. The job is considered to have succeeded in this case.
- `refreshlinks_warnings_total`: The number of times the job failed due to a recoverable issue. Possible `reason` label values include:
  - `lag_wait_failed`: The job timed out while waiting for replication.
- `refreshlinks_failures_total`: The number of times the job failed. The `reason` label may be:
  - `page_not_found`: The target page did not exist.
  - `rev_not_current`: The target revision was no longer the latest revision for the target page.
  - `rev_not_found`: The target revision was not found.
  - `lock_failure`: The job failed to acquire an exclusive lock to refresh the target page.
- `refreshlinks_parsercache_operations_total`: The number of times the job attempted to fetch parser output from the parser cache. Possible `status` label values include:
  - `cache_hit`: The parser output was found in the cache.
  - `cache_miss`: The parser output was not found in the cache.
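The following Python sketch models these metrics and their documented labels with an in-memory counter. `MetricsRecorder` and `ALLOWED_LABELS` are hypothetical and are not MediaWiki's statistics API. The sketch also treats the listed label values as exhaustive, which this page does not promise for the metrics whose values "include" those listed.

```python
from __future__ import annotations

from collections import Counter

# Documented label names and values per metric.
# Assumption: the listed values are treated as the complete set.
ALLOWED_LABELS: dict[str, dict[str, set[str]]] = {
    "refreshlinks_superseded_updates_total": {},
    "refreshlinks_warnings_total": {"reason": {"lag_wait_failed"}},
    "refreshlinks_failures_total": {
        "reason": {"page_not_found", "rev_not_current", "rev_not_found", "lock_failure"},
    },
    "refreshlinks_parsercache_operations_total": {"status": {"cache_hit", "cache_miss"}},
}


class MetricsRecorder:
    """Toy in-memory counters keyed by (metric name, sorted label pairs)."""

    def __init__(self) -> None:
        self.counts: Counter[tuple[str, tuple[tuple[str, str], ...]]] = Counter()

    def inc(self, metric: str, **labels: str) -> None:
        allowed = ALLOWED_LABELS[metric]  # raises KeyError for unknown metrics
        if set(labels) != set(allowed):
            raise ValueError(f"{metric} expects labels {sorted(allowed)}")
        for name, value in labels.items():
            if value not in allowed[name]:
                raise ValueError(f"unexpected {name}={value!r} for {metric}")
        self.counts[(metric, tuple(sorted(labels.items())))] += 1
```

For example, a lock failure would be recorded as `recorder.inc("refreshlinks_failures_total", reason="lock_failure")`, while a superseded update takes no labels.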

- See also: RefreshSecondaryDataUpdate, WikiPage::doSecondaryDataUpdates()

Definition at line 106 of file RefreshLinksJob.php.

Constructor & Destructor Documentation

◆ __construct()

RefreshLinksJob::__construct ( PageIdentity $page, array $params )

Definition at line 112 of file RefreshLinksJob.php.

References $params, and MediaWiki\Page\PageIdentity\canExist().

Member Function Documentation

◆ getDeduplicationInfo()

RefreshLinksJob::getDeduplicationInfo ( )

Subclasses may need to override this to make duplication detection work.

The resulting map conveys everything that makes the job unique. This is only checked if ignoreDuplicates() returns true, meaning that duplicate jobs are supposed to be ignored.

- Stability: stable to override

- Returns: array Map of key/values

- Since: 1.21

Reimplemented from Job.

Definition at line 570 of file RefreshLinksJob.php.
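To illustrate what such a map might contain for this job, here is a Python sketch. It assumes the map consists of the job type, the parameters minus keys that only describe why the job was queued, and the title unless `pages` is set (variant b ignores the job title). The `VOLATILE_PARAMS` set and both helper names are hypothetical; this page does not list which keys RefreshLinksJob actually drops.

```python
from __future__ import annotations

import json
from typing import Any

# Hypothetical: parameters that describe *why* a job was queued rather than
# *what* it refreshes, so two otherwise-identical jobs should still match.
VOLATILE_PARAMS = {
    "triggeringRevisionId",
    "triggeringUser",
    "triggeredRecursive",
    "rootJobSignature",
    "rootJobTimestamp",
}


def deduplication_info(
    job_type: str, namespace: int, dbkey: str, params: dict[str, Any]
) -> dict[str, Any]:
    """Build the map that identifies a job for duplicate detection."""
    stable = {k: v for k, v in params.items() if k not in VOLATILE_PARAMS}
    info: dict[str, Any] = {"type": job_type, "params": stable}
    # Multi-page jobs ignore the job title, so it must not affect the identity.
    if "pages" not in stable:
        info["namespace"] = namespace
        info["title"] = dbkey
    return info


def dedup_key(info: dict[str, Any]) -> str:
    """Canonical string form, so maps with the same content compare equal."""
    return json.dumps(info, sort_keys=True)
```

Sorting keys before serializing makes the comparison independent of the order in which parameters were added.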

◆ newDynamic()

RefreshLinksJob::newDynamic ( PageIdentity $page, array $params ) (static)

- Parameters: PageIdentity $page, array $params

- Returns: RefreshLinksJob

Definition at line 153 of file RefreshLinksJob.php.

◆ newPrioritized()

RefreshLinksJob::newPrioritized ( PageIdentity $page, array $params ) (static)

- Parameters: PageIdentity $page, array $params

- Returns: RefreshLinksJob

Definition at line 141 of file RefreshLinksJob.php.

Referenced by MediaWiki\Deferred\LinksUpdate\LinksUpdate\queueRecursiveJobs().

◆ run()

RefreshLinksJob::run ( )

Run the job.

- Returns: bool Success

Implements RunnableJob.

Definition at line 160 of file RefreshLinksJob.php.

References Job\$title, MediaWiki\Title\Title\canExist(), Job\getRootJobParams(), BacklinkJobUtils\partitionBacklinkJob(), runForTitle(), and setLastError().
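Judging from the references above (partitionBacklinkJob(), runForTitle(), setLastError()), run() appears to dispatch on the three job variants from the Detailed Description. The Python sketch below shows one way to structure that dispatch. `run_refresh_job` is hypothetical, `run_for_title` and `partition` are injected stand-ins for runForTitle() and the partitioning step, and continuing past a failed page instead of stopping is a choice made by this sketch, not something this page documents.

```python
from __future__ import annotations

from typing import Any, Callable

Page = tuple[int, str]  # (namespace, dbkey)


def run_refresh_job(
    params: dict[str, Any],
    job_title: Page,
    run_for_title: Callable[[Page], bool],
    partition: Callable[[dict[str, Any]], None],
) -> tuple[bool, str | None]:
    """Dispatch on the job variant; returns (success, last_error)."""
    if params.get("recursive"):
        # Variant a): schedule follow-up jobs instead of refreshing pages here.
        partition(params)
        return True, None

    if "pages" in params:
        # Variant b): refresh each listed page; the job title is ignored.
        targets = list(params["pages"].values())
    else:
        # Variant c): refresh the job title itself.
        targets = [job_title]

    ok = True
    for target in targets:
        # Call run_for_title first so later pages still run after a failure.
        ok = run_for_title(tuple(target)) and ok
    return ok, None if ok else "one or more pages failed to refresh"
```

Injecting the two callables keeps the dispatch logic testable without a database or a job queue.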

◆ runForTitle()

RefreshLinksJob::runForTitle ( PageIdentity $pageIdentity ) (protected)

- Parameters: PageIdentity $pageIdentity

- Returns: bool

Definition at line 223 of file RefreshLinksJob.php.

References setLastError().

Referenced by run().

◆ workItemCount()

RefreshLinksJob::workItemCount ( )

- Stability: stable to override

- Returns: int

Reimplemented from Job.

Definition at line 586 of file RefreshLinksJob.php.
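A plausible Python sketch of this count, assuming one work item per page that the job refreshes itself. Returning 0 for recursive jobs (which only schedule other jobs) is an assumption, and `work_item_count` is a hypothetical name.

```python
from __future__ import annotations

from typing import Any


def work_item_count(params: dict[str, Any]) -> int:
    """Estimate how many pages a RefreshLinksJob-style job refreshes directly."""
    if params.get("recursive"):
        return 0  # assumption: recursive jobs only schedule other jobs
    if "pages" in params:
        return len(params["pages"])  # variant b): one item per listed page
    return 1  # variant c): just the job title
```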

The documentation for this class was generated from the following file:

- includes/jobqueue/jobs/RefreshLinksJob.php